Fine-Tuning Llama 3.1 with SWIFT, an Unsloth Alternative for Multi-GPU LLM Training

- Sep 15, 2024

Updated: Sep 30, 2024

Unsloth has gained popularity as a library for fine-tuning large language models (LLMs). It significantly reduces both GPU memory usage and training time, while also offering some optimizations for inference. We covered Unsloth in detail in our earlier blog post, "How to Fine-Tune Llama 3.1 with Unsloth Twice as Fast and Using 70% Less GPU Memory."

However, Unsloth has its limitations. The most notable one is that it currently supports only single-GPU training. While the Unsloth team has announced that multi-GPU support is in beta testing, it is not yet available to the public.

So, what can you do if single-GPU training is not enough for your project—either because it's too slow or exceeds the capacity of even the most advanced GPUs? In this post, we introduce SWIFT, a robust alternative to Unsloth that enables efficient multi-GPU training for fine-tuning Llama 3.1.

What is SWIFT?

SWIFT is a cutting-edge library developed by ModelScope for training large language models (LLMs). It supports the full spectrum of tasks: pre-training, fine-tuning, reinforcement learning from human feedback (RLHF), inference, evaluation, and deployment. With SWIFT, you can work seamlessly with over 300 LLMs and more than 50 multimodal large models (MLLMs). This makes it a versatile tool for both research and production environments, enabling a complete workflow—from model training and evaluation to deployment and application.

SWIFT goes beyond basic training capabilities by supporting lightweight solutions like Parameter-Efficient Fine-Tuning (PEFT). It also includes a comprehensive Adapters library featuring the latest techniques such as NEFTune, LoRA+, and LLaMA-PRO. These adapters can be easily integrated into your own custom workflows, providing flexibility without the need to use SWIFT's specific training scripts.

To make the platform accessible even to those new to deep learning, SWIFT offers a user-friendly web interface powered by Gradio. This web UI simplifies control over training and inference processes and is complemented by courses and best practices designed for beginners. The SWIFT web UI is available on both Hugging Face Spaces and ModelScope Studio.

In this blog post, we will guide you through the process of fine-tuning Llama 3.1 using SWIFT on RunPod infrastructure. While these instructions focus on RunPod, they are also applicable to other GPU cloud providers, such as MassedCompute and Vast.ai, as well as major platforms like Google Cloud Platform (GCP), Amazon Web Services (AWS), and Microsoft Azure.

Set Up the RunPod Environment

To get started, log in to the RunPod console, navigate to "Pods," and click "Deploy." For this demonstration, we will use the Community Cloud. However, if you have strict security requirements, consider RunPod's Secure Cloud option. While it is slightly more expensive than the Community Cloud, it provides enhanced data security standards.

For our example, we will use a multi-GPU instance. We’ll select 2 x RTX A6000 GPUs, as each A6000 offers 48GB of GPU memory—sufficient for most smaller LLMs. However, the choice of GPU is flexible. RunPod provides a wide range of GPU types and configurations, including the powerful H100, allowing you to tailor your setup to your needs.
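To see why 48 GB per card is comfortable for an 8B-parameter model like the one used in this post, here is a back-of-the-envelope estimate of the memory taken by the weights alone. It is a sketch under simple assumptions: about 8 billion parameters, a fixed number of bytes per parameter for each precision, and no allowance for activations, gradients, optimizer state, or framework overhead, all of which add to the real footprint.

```python
def weight_memory_gib(num_params: float, bytes_per_param: float) -> float:
    """Memory needed just to hold the model weights, in GiB (2**30 bytes)."""
    return num_params * bytes_per_param / 2**30


# Rough parameter count for an 8B model (an approximation for illustration)
NUM_PARAMS = 8e9

# Bytes per parameter for a few common storage precisions
for dtype, nbytes in [("float32", 4), ("bfloat16", 2), ("int8", 1), ("4-bit", 0.5)]:
    print(f"{dtype:>9}: {weight_memory_gib(NUM_PARAMS, nbytes):6.1f} GiB")
```

In bfloat16 the weights come to roughly 15 GiB, which leaves a large share of an A6000's memory for activations and the LoRA training state.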

We will use the RunPod PyTorch 2.4.0 image, which is the latest version at the time of writing. If you are following this post later and opt for a newer PyTorch version, be mindful of potential environment mismatches. The SWIFT maintainers, like those of other libraries, work to keep up with the latest versions of PyTorch, CUDA, Transformers, and other key components, but this can take time. If the newest PyTorch environment is not compatible with the latest SWIFT version, try downgrading the PyTorch and CUDA versions; this often resolves compatibility issues.
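Because version mismatches are the usual culprit, it helps to record which versions are actually installed before debugging. The sketch below uses only the standard library's importlib.metadata, so it runs even when a package is missing; the list of distribution names is an assumption covering the libraries this post uses (ms-swift is the name under which SWIFT is published on PyPI).

```python
from importlib.metadata import PackageNotFoundError, version

# Distribution names to inspect (chosen for this walkthrough; adjust as needed)
PACKAGES = ("torch", "transformers", "datasets", "trl", "modelscope", "ms-swift")


def installed_versions(packages=PACKAGES):
    """Map each distribution name to its installed version, or None if absent."""
    found = {}
    for name in packages:
        try:
            found[name] = version(name)
        except PackageNotFoundError:
            found[name] = None
    return found


if __name__ == "__main__":
    for name, ver in installed_versions().items():
        print(f"{name:<12} {ver or 'not installed'}")
```

For the CUDA side, torch.version.cuda (from PyTorch itself) reports the CUDA version your PyTorch build was compiled against.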

To optimize costs, we will use Spot instances for this exercise. Spot instances are significantly cheaper than regular ones, but be aware of the risk: unlike MassedCompute, which provides a 1-hour termination notice for spot instances, RunPod may terminate them without warning.
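Because a Spot instance can disappear mid-run, it pays to resume from the newest checkpoint rather than start over. Hugging Face-style trainers, which SWIFT's Trainer builds on, save checkpoints as checkpoint-<step> folders inside output_dir, and trainer.train() accepts a resume_from_checkpoint argument. The helper below is a hypothetical, standard-library-only sketch for locating the newest such folder; transformers also provides get_last_checkpoint in transformers.trainer_utils for the same purpose.

```python
import os
import re
from typing import Optional

# Matches Hugging Face-style checkpoint folders such as "checkpoint-1500"
_CHECKPOINT_RE = re.compile(r"^checkpoint-(\d+)$")


def latest_checkpoint(output_dir: str) -> Optional[str]:
    """Return the path of the checkpoint folder with the highest step, or None."""
    if not os.path.isdir(output_dir):
        return None
    best_step, best_path = -1, None
    for entry in os.listdir(output_dir):
        match = _CHECKPOINT_RE.match(entry)
        path = os.path.join(output_dir, entry)
        # Only count directories whose name carries a step number
        if match and os.path.isdir(path):
            step = int(match.group(1))
            if step > best_step:
                best_step, best_path = step, path
    return best_path
```

After restarting the Pod, a call such as trainer.train(resume_from_checkpoint=latest_checkpoint("output")) would continue from the last saved step, while a None result simply starts training from scratch. This only helps if output_dir lives on the Volume Disk, since the Container Disk is cleared when the container stops.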

Lastly, keep the "Start Jupyter Notebook" checkbox selected. We will use Jupyter Notebook for this demonstration, so having it pre-installed is convenient.

LLM training also requires plenty of storage for model weights, datasets, and checkpoints. RunPod offers two types of storage:

Container Disk: This is temporary storage that is cleared each time you stop the container. If you plan to stop and restart your containers, avoid storing important project data on the Container Disk, as it will be lost when the container is stopped.

Volume Disk: This is persistent storage that retains data even if the container is restarted or a Spot instance is terminated. By default, the Volume Disk is mounted at the /workspace directory, making it the right place to store project data you need to keep.

To adjust storage for our LLM training project, click the "Edit Template" button and increase the Volume Disk size to a suitable capacity. For this demonstration, we will set it to 256 GB. This will provide ample space for saving the final model and training checkpoints persistently.
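Once the Pod is running, you can confirm that the Volume Disk has the capacity you requested. This small sketch uses Python's standard shutil.disk_usage; the /workspace default comes from RunPod's mount point described above, and the helper name is hypothetical.

```python
import shutil


def report_disk(path: str = "/workspace") -> dict:
    """Return total, used, and free space (in GB) for the filesystem holding `path`."""
    usage = shutil.disk_usage(path)  # named tuple of byte counts: total, used, free
    return {
        "total_gb": usage.total / 1e9,
        "used_gb": usage.used / 1e9,
        "free_gb": usage.free / 1e9,
    }
```

On the Pod, report_disk() should roughly reflect the Volume Disk size you configured, and report_disk("/") the Container Disk.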

You will also need to allocate space for the Container Disk unless you want to reconfigure all caching and temporary file storage to use the /workspace directory. Keeping caches on the Container Disk is not ideal, but storage costs are relatively low, so it won't significantly impact your budget. For simplicity, we will also increase the Container Disk size to 256 GB.
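If you would rather keep downloads on the Volume Disk instead of enlarging the Container Disk, one option is to point the library caches there. The sketch below assumes the standard environment variables HF_HOME (Hugging Face libraries, including the datasets cache) and MODELSCOPE_CACHE (ModelScope), and a hypothetical /workspace/cache folder. Set them before importing those libraries, since they typically read these variables when they load.

```python
import os


def redirect_caches(root: str = "/workspace/cache") -> dict:
    """Point Hugging Face and ModelScope caches at `root` (a hypothetical location)."""
    targets = {
        "HF_HOME": os.path.join(root, "huggingface"),
        "MODELSCOPE_CACHE": os.path.join(root, "modelscope"),
    }
    for var, path in targets.items():
        os.makedirs(path, exist_ok=True)  # create the folder if it does not exist yet
        os.environ[var] = path            # libraries imported afterwards read this variable
    return targets
```

Calling redirect_caches() in the first notebook cell, before importing transformers, datasets, modelscope, or swift, keeps the large model downloads on persistent storage.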

Once your storage settings are configured, click "Deploy Spot." Your instance will be ready shortly and will appear under the "Pods" section in the RunPod console.

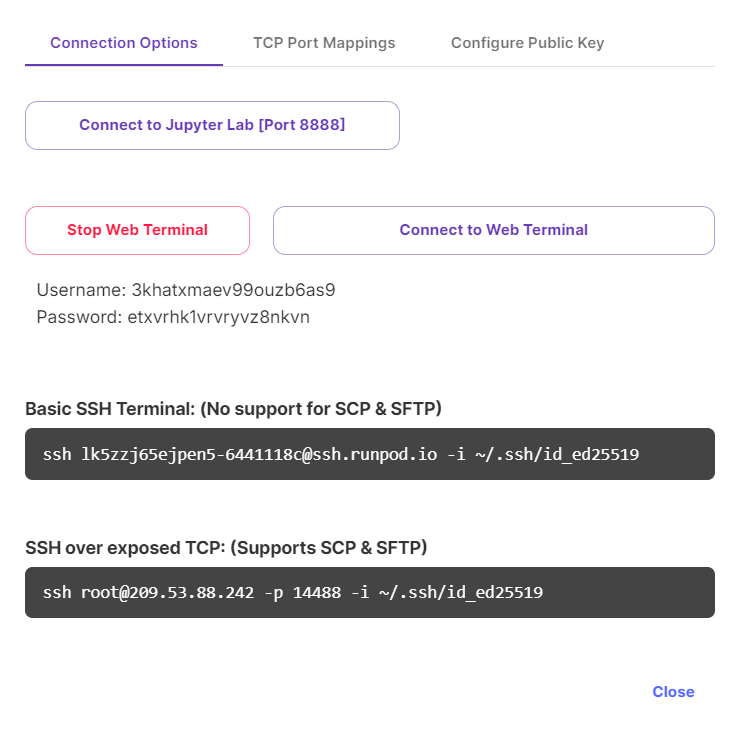

RunPod offers several connection options, including SSH and a Web Terminal accessible via your web browser. Choose the option that best suits your workflow.

Finally, to verify that the two RTX A6000 GPUs are available, use the nvidia-smi command.
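For a check you can run from a notebook, nvidia-smi also has a query mode that prints machine-readable CSV. The sketch below calls nvidia-smi --query-gpu=index,name,memory.total --format=csv,noheader,nounits and parses the result; the function names are hypothetical, and memory.total is reported in MiB when units are omitted.

```python
import subprocess
from typing import List, Tuple


def parse_gpu_csv(text: str) -> List[Tuple[int, str, int]]:
    """Parse 'index, name, memory.total' CSV lines (memory in MiB) into tuples."""
    gpus = []
    for line in text.strip().splitlines():
        index, name, memory_mib = (field.strip() for field in line.split(","))
        gpus.append((int(index), name, int(memory_mib)))
    return gpus


def query_gpus() -> List[Tuple[int, str, int]]:
    """Run nvidia-smi in query mode and return one tuple per visible GPU."""
    output = subprocess.run(
        ["nvidia-smi", "--query-gpu=index,name,memory.total",
         "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    ).stdout
    return parse_gpu_csv(output)
```

On the Pod described here, len(query_gpus()) should be 2, with both entries naming an RTX A6000.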

Installing SWIFT

The official SWIFT documentation suggests a straightforward installation from PyPI, where SWIFT is published as ms-swift. However, if you install it this way as of September 2024 and then try to import it, you will encounter the following error:

Failed to import swift.trainers.trainers because of the following error (look up to see its traceback):
Failed to import modelscope.msdatasets because of the following error (look up to see its traceback):
cannot import name 'ftp_head' from 'datasets.utils.file_utils' (/usr/local/lib/python3.11/dist-packages/datasets/utils/file_utils.py)

To fix it, install SWIFT from a specific commit of its GitHub repository by running the following command:

pip install --upgrade --force-reinstall --no-cache-dir git+https://github.com/modelscope/ms-swift.git@88dab2b6625280e813bd8835662e1d2be24a3132

After installing SWIFT, click "Connect to Jupyter Lab" in the RunPod console. This will open Jupyter Lab in a new tab, allowing you to proceed with your work.

Training Llama 3.1 with SWIFT

To begin training Llama 3.1 with SWIFT, start by creating a new Jupyter Notebook. Test your installation by running the following import:

from swift import Trainer, TrainingArguments

If the import succeeds, congratulations: you have a fully functional SWIFT installation and are ready to proceed!

First, let's perform the necessary imports.

from functools import partial  # used by preprocess_dataset()
import matplotlib.pyplot as plt  # used to plot the token-length histograms
import torch
import wandb
from datasets import load_dataset
from modelscope import AutoModelForCausalLM, AutoTokenizer, MsDataset, snapshot_download
from swift import Swift, LoraConfig, Trainer, TrainingArguments, get_peft_model
from swift.llm import get_template, TemplateType, register_template, Template, TEMPLATE_MAPPING
from transformers import BitsAndBytesConfig
from trl import SFTTrainer, DataCollatorForCompletionOnlyLM

Unlike some other frameworks, SWIFT is designed to work with models hosted on ModelScope rather than Hugging Face. Therefore, to use Llama 3.1, we need to locate it on ModelScope:

model_name = 'LLM-Research/Meta-Llama-3.1-8B-Instruct'
model = AutoModelForCausalLM.from_pretrained(model_name, torch_dtype=torch.bfloat16, device_map='auto', trust_remote_code=True)  # bfloat16 weights; device_map='auto' spreads the layers across the available GPUs
tokenizer = AutoTokenizer.from_pretrained(model_name, trust_remote_code=True)

We should also define a padding token. We will use *** (token ID 12488) for this purpose.

tokenizer.pad_token_id = 12488

Next, we need to load the dataset. For consistency, we will use the same dataset we used for fine-tuning Llama 3.1 with Unsloth:

train_set = load_dataset("yahma/alpaca-cleaned", split="train")

Processing Training Examples for Consistent Length

SWIFT offers a variety of pre-built LLM prompt templates. It also allows you to create custom templates tailored to your needs. However, template-based dataset processing does not apply truncation and padding by default, and the documentation does not clearly explain how to enable them.

When a batch contains more than one training example, all examples in it must have the same length. To ensure this, we will process the examples ourselves, padding and truncating them to a fixed length.

We will introduce three functions for this task:

create_prompt_formats() converts dataset dictionary items into Llama 3.1 prompts. Unless the test flag is set to True, it also appends the desired response.

preprocess_batch() converts these prompts into a list of tokens, padding or truncating them to max_length to ensure they are all the same length.

preprocess_dataset() processes the dataset and prepares it for training.
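One detail worth checking before using create_prompt_formats(): the yahma/alpaca-cleaned records carry three fields, instruction, input, and output, and the function below builds the user turn from instruction alone. Where input is non-empty, it holds extra context for the instruction. If you want to keep that context, a variant could append it to the user message, as in this sketch; the function name and the blank-line separator are illustrative choices.

```python
def create_prompt_formats_with_input(sample, test=False):
    """Variant of create_prompt_formats() that also includes Alpaca's `input` field."""
    user_message = sample["instruction"]
    if sample.get("input"):  # many Alpaca records leave `input` empty
        user_message += "\n\n" + sample["input"]
    result = (
        "<|start_header_id|>system<|end_header_id|>\n\n"
        "You are a helpful assistant.<|eot_id|>"
        "<|start_header_id|>user<|end_header_id|>\n\n"
        f"{user_message}<|eot_id|>"
        "<|start_header_id|>assistant<|end_header_id|>\n\n"
    )
    if not test:  # training examples also carry the target response
        result += f"{sample['output']}<|eot_id|>"
    sample["prompt"] = result
    return sample
```

Mapping this function over the dataset in place of create_prompt_formats() inside preprocess_dataset() would leave the rest of the pipeline unchanged.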

def create_prompt_formats(sample, test=False):  # build a Llama 3.1 chat prompt from one Alpaca record
    result = f"""<|start_header_id|>system<|end_header_id|>

You are a helpful assistant.<|eot_id|><|start_header_id|>user<|end_header_id|>

{sample['instruction']}<|eot_id|><|start_header_id|>assistant<|end_header_id|>

"""
    if not test:  # training examples also include the target response
        result += f"""{sample['output']}<|eot_id|>"""
    sample["prompt"] = result
    return sample


def preprocess_batch(batch, tokenizer, max_length, truncation, padding):  # tokenize a batch of prompts
    return tokenizer(
        batch['prompt'],
        max_length=max_length,
        truncation=truncation,
        padding='max_length' if padding else False
    )


def preprocess_dataset(tokenizer, max_length, seed, dataset, truncation, padding, test):
    print("Preprocessing dataset...")
    _create_prompt_formats = partial(create_prompt_formats, test=test)  # partial comes from functools
    dataset = dataset.map(_create_prompt_formats)
    _preprocessing_function = partial(preprocess_batch, max_length=max_length, tokenizer=tokenizer, truncation=truncation, padding=padding)
    dataset = dataset.map(
        _preprocessing_function,
        batched=True
    )
    dataset = dataset.shuffle(seed=seed)
    return dataset

Next, we need to determine a suitable maximum length for the training examples. While Llama 3.1 supports a context window of up to 128k tokens, using the maximum size for training examples is inefficient if the actual text is much shorter: padded positions still pass through every forward and backward computation, so large amounts of padding can slow down training significantly. Therefore, we want to keep the examples as short as possible while ensuring we do not cut off too much of the data.
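One way to make "not too much" concrete is to choose the smallest length that covers a target share of the examples, for instance 99%, instead of reading it off a plot. A minimal sketch, assuming lengths is a list of token counts like the one computed in the next step; the 99% default is an arbitrary illustration, and the helper names are hypothetical.

```python
import math


def length_at_coverage(lengths, coverage=0.99):
    """Smallest length L such that at least `coverage` of the examples have len <= L."""
    if not lengths:
        raise ValueError("lengths must not be empty")
    ordered = sorted(lengths)
    # Number of examples that must fit without truncation
    needed = math.ceil(coverage * len(ordered))
    return ordered[max(needed, 1) - 1]


def truncated_share(lengths, max_length):
    """Fraction of examples that would be cut off at `max_length`."""
    return sum(1 for n in lengths if n > max_length) / len(lengths)
```

For example, truncated_share(lengths, 700) tells you what fraction of examples a 700-token limit would truncate.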

To find the appropriate maximum length, we process the dataset with the context window size limit but without truncation or padding. This allows us to observe the actual length distribution of the examples:

seed = 42  # fixed seed for reproducible shuffling; any integer works

exploration_set = preprocess_dataset(tokenizer=tokenizer, max_length=128000, seed=seed, dataset=train_set, truncation=False, padding=False, test=False)
lengths = [len(entry['input_ids']) for entry in exploration_set]

plt.hist(lengths, bins=30, color='blue', edgecolor='black')
plt.title('Histogram of Lengths of input_ids')
plt.xlabel('Length of input_ids')
plt.ylabel('Frequency')
plt.show()

As shown in the histogram, most examples in the Alpaca dataset, after conversion to the Llama 3.1 prompt format, are under 700 tokens. To be safe, we will set the maximum length to 700 tokens and reprocess the dataset:

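max_length = 700  # chosen from the length histogram above

train_set_processed = preprocess_dataset(tokenizer=tokenizer, max_length=max_length, seed=seed, dataset=train_set, truncation=True, padding=True, test=False)

Now, let's plot the distribution again to confirm that all examples have the same length of 700 tokens:

processed_lengths = [len(entry['input_ids']) for entry in train_set_processed]

plt.hist(processed_lengths, bins=30, color='blue', edgecolor='black')
plt.title('Histogram of Lengths of input_ids')
plt.xlabel('Length of input_ids')
plt.ylabel('Frequency')
plt.show()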
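A histogram is easy to misread, so an exact check is a cheap complement. This sketch assumes only that each processed row exposes input_ids and attention_mask lists, as the tokenizer output does; the helper names are hypothetical.

```python
from collections import Counter


def length_summary(rows, key="input_ids"):
    """Count how many rows have each sequence length for the given column."""
    return Counter(len(row[key]) for row in rows)


def assert_uniform_length(rows, expected):
    """Raise if any row's input_ids or attention_mask length differs from `expected`."""
    for key in ("input_ids", "attention_mask"):
        counts = length_summary(rows, key)
        if set(counts) != {expected}:
            raise AssertionError(f"{key} lengths {dict(counts)} != {expected}")
```

After padding and truncation, assert_uniform_length(train_set_processed, max_length) should pass silently.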

It is always a good idea to decode a few processed training examples to ensure they look correct. This helps verify that the preprocessing has been done properly:

tokenizer.decode(train_set_processed[0]['input_ids'])

With the dataset preprocessing complete, we can now set up the LoRA (Low-Rank Adaptation) parameters.
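Before configuring LoRA, it helps to estimate how many parameters it will actually train. Each adapted linear layer with in_features inputs and out_features outputs gains two matrices, A (r × in_features) and B (out_features × r), i.e. r·(in_features + out_features) parameters. The sketch below applies that to the settings configured next (r=4 on all seven projection matrices) using the published Llama 3.1 8B shapes (hidden size 4096, MLP size 14336, 32 layers, 8 key/value heads of dimension 128). Treat it as an estimate, not a readout from SWIFT.

```python
# Llama 3.1 8B shapes (from its published model configuration)
HIDDEN = 4096
INTERMEDIATE = 14336
NUM_LAYERS = 32
KV_DIM = 8 * 128  # 8 key/value heads x head dimension 128 (grouped-query attention)

# (in_features, out_features) of each projection targeted by LoRA
PROJECTIONS = {
    "q_proj": (HIDDEN, HIDDEN),
    "k_proj": (HIDDEN, KV_DIM),
    "v_proj": (HIDDEN, KV_DIM),
    "o_proj": (HIDDEN, HIDDEN),
    "gate_proj": (HIDDEN, INTERMEDIATE),
    "up_proj": (HIDDEN, INTERMEDIATE),
    "down_proj": (INTERMEDIATE, HIDDEN),
}


def lora_param_count(r: int) -> int:
    """Trainable LoRA parameters: A (r x in) plus B (out x r) per projection, per layer."""
    per_layer = sum(r * (fan_in + fan_out) for fan_in, fan_out in PROJECTIONS.values())
    return per_layer * NUM_LAYERS


trainable = lora_param_count(r=4)
print(f"{trainable:,} trainable parameters")
```

For r=4 this comes to 10,485,760 parameters, roughly 0.13% of the model's ~8 billion, and the count grows linearly with r. With lora_alpha=8, standard LoRA scales the update B·A by lora_alpha / r = 2.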

Configuring LoRA Parameters

To configure LoRA for fine-tuning, we define the LoraConfig parameters:

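lora_config = LoraConfig(
    r=4,  # rank of the low-rank update matrices
    target_modules=["q_proj", "k_proj", "v_proj", "o_proj",
                    "gate_proj", "up_proj", "down_proj"],  # all attention and MLP projections
    lora_alpha=8,  # scaling factor; the LoRA update is scaled by lora_alpha / r
    lora_dropout=0.05)

model = Swift.prepare_model(model, lora_config)  # wrap the base model with the LoRA adapters

Setting Up the Data Collator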
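Conceptually, completion-only training gives every token up to and including the response header the label -100, the index that PyTorch's cross-entropy loss ignores by default, so only the answer tokens contribute to the loss. The following pure-Python sketch illustrates that masking on token-ID lists. It is a simplified stand-in for what trl's DataCollatorForCompletionOnlyLM does, not its actual implementation, and the function names are hypothetical.

```python
from typing import List, Sequence

IGNORE_INDEX = -100  # default ignore_index of torch.nn.CrossEntropyLoss


def find_subsequence(seq: Sequence[int], pattern: Sequence[int]) -> int:
    """Index of the first occurrence of `pattern` in `seq`, or -1 if absent."""
    n = len(pattern)
    for start in range(len(seq) - n + 1):
        if list(seq[start:start + n]) == list(pattern):
            return start
    return -1


def completion_only_labels(input_ids: Sequence[int],
                           attention_mask: Sequence[int],
                           response_ids: Sequence[int]) -> List[int]:
    """Copy input_ids as labels, masking the prompt, the response header, and padding."""
    start = find_subsequence(input_ids, response_ids)
    if start == -1:
        # No response header (e.g. truncated away): ignore the whole example
        return [IGNORE_INDEX] * len(input_ids)
    answer_start = start + len(response_ids)
    return [
        tok if i >= answer_start and mask == 1 else IGNORE_INDEX
        for i, (tok, mask) in enumerate(zip(input_ids, attention_mask))
    ]
```

This also shows why truncation matters here: if an example is cut before its response header, the collator finds no template, and the example contributes nothing to the loss (trl emits a warning in that case).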

It is good practice to train on completions only, computing the loss just on the model's response, which keeps the model from overfitting to the input sequences. To do this, we create a data collator and specify the string that marks the start of the model's response:

response_template = "<|start_header_id|>assistant<|end_header_id|>"
collator = DataCollatorForCompletionOnlyLM(response_template, tokenizer=tokenizer)

Defining the Training Configuration

Now, we define the training configuration using TrainingArguments. This configuration specifies parameters like the output directory, learning rate, number of training epochs, evaluation steps, and more:

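train_args = TrainingArguments(
    output_dir='output',
    learning_rate=1e-4,
    num_train_epochs=2,
    eval_steps=500,
    save_steps=500,
    optim="adamw_8bit",  # 8-bit AdamW (requires bitsandbytes)
    fp16=True,  # the weights were loaded in bfloat16; bf16=True is a consistent alternative on Ampere GPUs such as the A6000
    evaluation_strategy='steps',
    save_strategy='steps',
    log_level="debug",
    dataloader_num_workers=16,
    per_device_train_batch_size=1,
    gradient_accumulation_steps=16,  # gradients from 16 batches are accumulated per optimizer step
    logging_steps=1,
)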
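These settings also determine how many optimizer steps a run takes, and therefore how often the 500-step evaluations and checkpoints will occur. A sketch of that arithmetic, assuming a single training process (the notebook setup here, where device_map='auto' splits one copy of the model across both GPUs); under a data-parallel launcher such as torchrun, each process would contribute its own per-device batch. The dataset size is a parameter, and the function name is hypothetical.

```python
import math


def optimizer_steps(num_examples: int, per_device_batch: int = 1,
                    grad_accum: int = 16, num_processes: int = 1,
                    epochs: int = 2) -> dict:
    """Effective batch size and optimizer-step counts for a Trainer-style loop."""
    effective_batch = per_device_batch * grad_accum * num_processes
    # Batches each process sees per epoch (the last, partial batch is kept)
    batches_per_epoch = math.ceil(num_examples / (per_device_batch * num_processes))
    # One optimizer step per `grad_accum` batches; how a leftover partial
    # accumulation at the end of an epoch is handled can vary by transformers version
    steps_per_epoch = max(batches_per_epoch // grad_accum, 1)
    return {
        "effective_batch": effective_batch,
        "steps_per_epoch": steps_per_epoch,
        "total_steps": steps_per_epoch * epochs,
    }
```

With the values above, each optimizer step sees 16 examples, so an epoch over N examples takes about N / 16 steps.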

Initializing the Trainer

With the model and configuration in place, we initialize the Trainer:

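split = train_set_processed.train_test_split(test_size=0.01, seed=seed)  # small held-out set; evaluation_strategy='steps' needs an eval_dataset

trainer = Trainer(
    model=model,
    args=train_args,
    train_dataset=split['train'],
    eval_dataset=split['test'],
    tokenizer=tokenizer,
    data_collator=collator
)

Start Training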

Now, everything is set up, and we are ready to start the training process:

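trainer.train()

While the training is running, it's useful to monitor GPU utilization. You can do this with the nvidia-smi command, for example by running watch -n 1 nvidia-smi in a terminal, which refreshes its output every second.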

You can also find the complete code for this tutorial in our Colab.

Conclusion

Developing your own custom LLM can enhance data security and compliance and give your product an AI competitive advantage. You can check our other posts to get an extensive explanation of what the network effect is and how AI enables it, how to build an AI competitive advantage for your company, what culture helps you build the right AI products, what to avoid in your AI strategy and execution, and more.

If you need help in building an AI product for your business, look no further. Our team of AI technology consultants and engineers has decades of experience in helping technology companies like yours build sustainable competitive advantages through AI technology. From data collection to algorithm development, we can help you stay ahead of the competition and secure your market share for years to come.

Contact us today to learn more about our AI technology consulting offering.

If you want to stay posted on how to build a sustainable competitive advantage with AI technologies, please subscribe to our blog post updates below.
